As artificial intelligence advances, connecting these technologies with ethical reasoning becomes increasingly critical.

Can generative AI development services create systems capable of moral judgment? Let’s explore!

The Need for Ethical AI

Recent strides in AI

have brought tremendous benefits, yet also risks from improperly designed

systems.

As capabilities grow

more powerful, ensuring AI behaves ethically is crucial.

Generative AI development services must prioritize not just capabilities, but also safety and

social impacts.

AI should remain

under meaningful human direction aligned with moral values.

Otherwise, unchecked AI could violate privacy, automate harm, entrench biases, manipulate people at scale, and exacerbate societal divisions.

Thoughtful oversight

and design principles are needed to avoid misuse.

By grounding AI in

ethics, generative AI development services can steer these transformative

technologies toward benefitting humanity.

But what framework

should guide the moral reasoning of AI systems? Let's explore!

Teaching Rules vs. Principles

One approach towards

ethical AI is training systems to follow codified rules and constraints

explicitly defined by developers. But rule-based programming poses challenges.

Enumerating ethical

rules that fully encompass the complexity of human morality may prove

intractable.

Nuances and edge

cases make simple directives inadequate. Strict rules allow little flexibility

in adapting principles to context.

An alternative approach

is trying to teach AI generalizable higher-order principles like honesty,

justice, prevention of harm, and respect for autonomy.

AI then deduces

situationally appropriate rules through reasoning.

This roots decisions

in conceptual values transferable across contexts, while still allowing nuanced

application.

Encoding complex

philosophies into AI logic remains deeply challenging.

Ultimately, a hybrid

framework balancing principles and rules tailored by ethical oversight may

prove most pragmatic.

But all approaches

require grappling with subjective interpretation.

Navigating Subjective Morality

Human morality

intrinsically links to subjective experience - feelings, culture, and values.

This poses challenges for programming universal ethics into AI.

Moral dilemmas often

involve conflicts between principles where a singular right answer is unclear.

For example, truth-telling can conflict with preventing harm. AI faces the same struggles in weighing competing values.

Different cultures

and individuals also hold differing moral frameworks. AI could align with some

worldviews while violating others.

Programming a

universally accepted human morality may be improbable.

Generative AI development services must grapple with whose morality to embed in AI, and with how to ensure sophisticated AI understands real-world context when applying ethical reasoning.

One helpful strategy

is exposing AI during training to arguments from diverse moral perspectives and

analyzing complex scenarios. This develops nuanced ethical judgment.

Overall, subjective

morality makes perfect ethical AI unattainable. But thoughtfully benchmarking

systems against human reasoning helps align values.

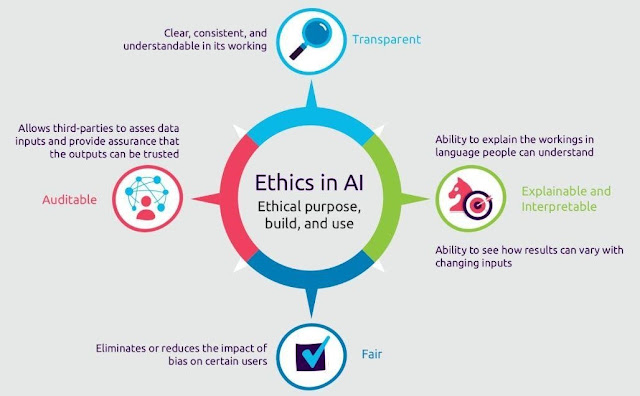

Transparency in AI Decision-Making

For users to trust in

and validate the ethics of AI systems, transparency is crucial. Generative AI

development services should ensure AI can explain its reasoning and decisions.

Some AI systems, like neural networks, are black boxes that obscure the logic behind their predictions. Although such models can perform well, their opacity risks inscrutable outputs that humans cannot fully evaluate.

Generative AI

development services can employ explainable AI techniques to shed light on

model behaviours.

These include

attention layers revealing what inputs models focus on, sensitivity analysis,

and local approximation methods.
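As one illustration of the sensitivity analysis mentioned above, the sketch below nudges each input up and down and measures how much the output moves. It is a minimal example under stated assumptions: `toy_model` is a hypothetical stand-in for a trained model, and the `sensitivity` helper and its `eps` parameter are names invented here, not part of any particular library.

```python
from typing import Callable, Sequence


def sensitivity(model: Callable[[Sequence[float]], float],
                x: Sequence[float],
                eps: float = 1e-4) -> list:
    """Estimate the change in model output per unit change in each input,
    using central finite differences around the point x."""
    scores = []
    for i in range(len(x)):
        up = list(x)
        down = list(x)
        up[i] += eps
        down[i] -= eps
        scores.append((model(up) - model(down)) / (2 * eps))
    return scores


def toy_model(features: Sequence[float]) -> float:
    """Hypothetical stand-in for a trained model: a fixed linear scorer."""
    weights = [0.5, -2.0, 0.0]
    return sum(w * f for w, f in zip(weights, features))


# Inputs with larger absolute scores influence this prediction more.
print(sensitivity(toy_model, [1.0, 3.0, 10.0]))
```

For this linear stand-in the scores simply recover the weights, so the third input, whose weight is zero, shows no influence. With a real model the scores describe behaviour only near the chosen input, which is why this counts as a local explanation.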

In addition, AI interfaces should allow humans to probe the justifications for actions and the factors that went into decisions.

This supports meaningful oversight, the identification of faults, and appeals against improper conduct.

Transparency enables

crowdsourcing diverse human perspectives to continually refine AI ethics. It

builds accountability and trust in AI intended to serve all people.

The Difficulty of Value Alignment

A core challenge in

developing safe advanced AI is the value alignment problem - ensuring systems

behave according to human ethics even as intelligence exceeds our own. This

remains unsolved.

Specifying objectives

and constraints that fully encompass multifaceted human values is tremendously

difficult. Generative AI development services grapple with this limitation.

Systems optimizing narrow goals could find loopholes and unintuitive ways of maximizing them, even when doing so violates human ethics. Goal formulations therefore need to be as exhaustive as possible.

Reinforcement

learning AI exploring novel ways to meet goals also risks diverging from

intended behaviours without sufficient safeguards. Ongoing correction by human

oversight is key.

Generative AI development services should prioritize research tackling the value alignment problem.

Advances in machine

ethics, preference learning, and AI safety will prove critical to realizing AI

for good.

Cultivating Responsible AI Institutions

Realizing ethical AI

requires building institutional cultures that prioritize the societal

implications of generative models and technologies.

Responsible disclosure practices allow potential risks to be tested in constrained environments before broad deployment. Patience keeps the focus on safety rather than on a rush to market.

Inclusive design

teams representing diverse identities and perspectives help spot potential

harms earlier. Civil debate channels disagreements constructively.

Education in ethics

and philosophy provides cognitive frameworks for wrestling with hard tradeoffs.

Regular external audits add accountability.

Financial incentives

could encourage deliberate, values-based innovation over chasing capabilities

alone. Investments in AI safety research strengthen guardrails.

Generative AI

development services adopting such practices set ethical foundations. The

choices of leaders today shape the future.

Global Perspectives on Moral AI

Developing AI that

aligns with a diversity of cultural values and norms across the world poses

challenges. Collaboration helps.

Some regions may

emphasize communitarian ethics over individualism, or prioritize order over

personal freedoms. Different ideologies exist.

But many moral

foundations around justice, truthfulness, preventing harm, and human dignity

prove shared. There are commonalities to build upon.

International

workshops eliciting perspectives on issues like privacy, transparency, bias and

control could uncover areas of convergence to guide ethical AI.

While consensus on

every issue appears unlikely, bringing the world together around a shared

vision for moral AI systems could help safeguard humanity’s future.

Building Fairness into AI Systems

Ensuring AI systems

make fair and unbiased decisions is an important ethical priority.

Generative AI

development services must proactively address multiple facets of unfairness

that can emerge in AI models.

Mitigating Historical Biases in Data

A major source of

unfair AI outputs is biased training data reflecting historical discrimination.

Models inherit our past biases.

Data used for

training AI often reflects unequal access to opportunity correlated with race,

gender, income and other attributes. Relying blindly on such data propagates

injustice.

Generative AI

development services can pre-process datasets to remove sensitive attributes

not essential for the AI’s purpose.

Balancing

underrepresented groups in the data also helps.
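The two pre-processing steps above can be sketched in a few lines. This is only an illustration: the column names, the `SENSITIVE` set, and both helper functions are assumptions made for the example, and reweighting is just one of several ways to balance groups (resampling is another).

```python
from collections import Counter

# Assumption for this example: which columns count as sensitive.
SENSITIVE = {"gender", "race"}


def balancing_weights(rows, group_key):
    """Give each row a weight so that every group contributes the same
    total weight, however many rows it has."""
    counts = Counter(row[group_key] for row in rows)
    n_groups = len(counts)
    total = len(rows)
    return [total / (n_groups * counts[row[group_key]]) for row in rows]


def drop_sensitive(rows, sensitive=SENSITIVE):
    """Return copies of the rows without the sensitive columns."""
    return [{k: v for k, v in row.items() if k not in sensitive}
            for row in rows]


rows = [
    {"gender": "m", "years_experience": 5},
    {"gender": "m", "years_experience": 2},
    {"gender": "m", "years_experience": 7},
    {"gender": "f", "years_experience": 6},
]

# Compute the weights first, while the group column is still available.
weights = balancing_weights(rows, "gender")
cleaned = drop_sensitive(rows)
print(weights)
print(cleaned)
```

The weights are computed before the sensitive column is dropped, because the group label is still needed for balancing even if the model never sees it.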

In addition, techniques like adversarial debiasing train the model against an adversary that tries to infer demographics from its predictions, pushing the model to base those predictions on the desired qualities rather than on group membership.

However, truly

eliminating the influences of historical inequality in our datasets remains

challenging. Thoughtful monitoring for fairness is key.

Designing Fair Model Architectures

In addition to biases

in data, the very structure of AI models can discriminate through choices like

input features or algorithms weighing some groups differently.

For example, resume

screening AIs weighing college prestige could disadvantage those unable to

access elite institutions regardless of qualifications.

Generative AI

development services can conduct bias audits unpacking how different identity

groups fare within models. Identifying skewed impacts guides redesign.
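A very simple form of such an audit compares how often each group receives a favourable decision. The sketch below is a hedged illustration rather than a complete audit procedure: the function names and the toy data are invented for the example, and the ratio it reports is only one of many possible audit statistics.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Fraction of favourable decisions (1 = favourable) per group."""
    selected = defaultdict(int)
    total = defaultdict(int)
    for decision, group in zip(decisions, groups):
        total[group] += 1
        selected[group] += int(decision)
    return {g: selected[g] / total[g] for g in total}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest.
    A value of 1.0 means all groups are selected at the same rate."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Toy data: model decisions and the group each applicant belongs to.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
print(rates)
print(disparate_impact_ratio(rates))
```

A commonly cited rule of thumb treats ratios below 0.8 as a signal for closer review, but the appropriate threshold depends on context and is a judgment call rather than a property of the code.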

Techniques like

adversarial debiasing during model training can also optimize fairness by

correcting demographic disparities in outputs. This bakes in equity.

However, optimizing

for multiple definitions of fairness poses tradeoffs data scientists must

navigate. There are few perfect solutions.

Ensuring Accessibility for Diverse Users

Designing AI

interfaces and experiences accessible to people of all abilities and

backgrounds also promotes fairness and inclusion.

Otherwise, groups

like those with visual, hearing or mobility impairments can face exclusion from

services reliant on narrow input modes.

Generative AI

development services can employ practices like screen reader support, captions,

and keyboard shortcuts ensuring accessibility for different needs.

User research with

diverse focus groups helps uncover accessibility barriers early in design

phases before product launch. Inclusive teams aid this effort.

Continual improvement

based on user feedback helps refine products to serve populations often

marginalized by design oversights.

Prioritizing

accessibility expands benefits to underserved groups. It also often improves

experiences for everyone through inclusive design.

Navigating Tradeoffs in Fairness Definitions

There exist many

statistical formulations of fairness with pros and cons that generative AI

development services must balance.

Individual fairness

requires similar predictions for similar individuals.

However, determining

the right similarity metric is challenging. Relying on biased or incomplete

metrics risks new harms.

Group fairness

strives for equitable outcomes between demographic groups. But which groups and

parity metrics to prioritize remains debated. These choices determine who

benefits.

Causal reasoning aims to identify the distortions and proxy variables that perpetuate historic discrimination. However, the relevant causal relationships are often unclear or debatable.

Tradeoffs frequently

arise between fairness definitions. For example, improving parity for one group

can worsen outcomes for others. There are rarely perfect solutions.
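A small worked example shows how two group fairness definitions can pull in different directions. The sketch below assumes binary labels and predictions and two groups whose base rates differ. The metric functions and the toy data are illustrative assumptions, not a standard API.

```python
def rate(values):
    """Mean of a list of 0/1 values, or 0.0 for an empty list."""
    return sum(values) / len(values) if values else 0.0


def demographic_parity_gap(y_pred, groups, a, b):
    """Absolute difference in positive prediction rates between two groups."""
    rate_a = rate([p for p, g in zip(y_pred, groups) if g == a])
    rate_b = rate([p for p, g in zip(y_pred, groups) if g == b])
    return abs(rate_a - rate_b)


def equal_opportunity_gap(y_true, y_pred, groups, a, b):
    """Absolute difference in true positive rates between two groups."""
    tpr_a = rate([p for t, p, g in zip(y_true, y_pred, groups)
                  if g == a and t == 1])
    tpr_b = rate([p for t, p, g in zip(y_true, y_pred, groups)
                  if g == b and t == 1])
    return abs(tpr_a - tpr_b)


groups = ["A"] * 4 + ["B"] * 4
y_true = [1, 1, 1, 0, 1, 0, 0, 0]      # base rates differ: 3/4 vs 1/4

accurate = [1, 1, 1, 0, 1, 0, 0, 0]    # predicts every label correctly
equalized = [1, 1, 0, 0, 1, 1, 0, 0]   # selects half of each group

for name, y_pred in [("accurate", accurate), ("equalized", equalized)]:
    print(name,
          demographic_parity_gap(y_pred, groups, "A", "B"),
          equal_opportunity_gap(y_true, y_pred, groups, "A", "B"))
```

In this toy data the fully accurate predictor has no gap in true positive rates but a large gap in selection rates, while the predictor that equalizes selection rates opens a gap in true positive rates. When base rates differ between groups, closing one gap tends to open the other.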

Humility and

transparency around limitations help fairly apply AI. Fairness remains an

ongoing process of incremental improvements, not a singular solution.

Human Oversight in Automated Systems

Reliable and

meaningful human oversight mechanisms are crucial when developing AIs that make

impactful decisions about human lives.

Generative AI

development services can implement various processes facilitating more ethical

and accountable automation.

Incorporating Human Judgement

AI systems excel at

narrow tasks but lack the generalized human reasoning required for ethically

navigating nuanced real-world complexities.

In high-stakes

decisions like parole rulings and healthcare diagnoses, the unique lived

experiences and contextual judgment of people remain irreplaceable.

By keeping humans in

the loop to review, validate, and override model recommendations when appropriate,

we direct automation towards supporting rather than replacing human expertise

and ethics.

AI can surface

insights and draft decisions for human consideration. But people should retain

authority over consequential determinations.
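One way to picture this division of labour is a routing rule that sends consequential or uncertain recommendations to a person. The sketch below is a minimal illustration under assumed names: the `Recommendation` fields, the `min_confidence` threshold, and both functions are hypothetical, and a real system would need far richer criteria.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Recommendation:
    case_id: str
    suggested: str        # the model's draft decision
    confidence: float     # model confidence between 0 and 1
    high_stakes: bool     # flagged by policy, not by the model


def needs_human_review(rec: Recommendation,
                       min_confidence: float = 0.9) -> bool:
    """High-stakes or low-confidence cases always go to a person."""
    return rec.high_stakes or rec.confidence < min_confidence


def final_decision(rec: Recommendation,
                   reviewer_decision: Optional[str] = None,
                   min_confidence: float = 0.9) -> str:
    """Return the reviewer's decision where review is required;
    otherwise fall back to the model's suggestion."""
    if needs_human_review(rec, min_confidence):
        if reviewer_decision is None:
            raise ValueError(f"case {rec.case_id} requires human review")
        return reviewer_decision
    return rec.suggested


routine = Recommendation("c1", "approve", 0.99, high_stakes=False)
parole = Recommendation("c2", "approve", 0.99, high_stakes=True)

print(final_decision(routine))
print(final_decision(parole, reviewer_decision="deny"))
```

In this sketch the model's suggestion for the high-stakes case is only a draft: the reviewer's decision is the one returned, and the call fails outright if no reviewer has weighed in.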

This helps catch

potential model errors and biases missed by developers.

It also maintains

moral agency over sensitive judgement calls versus fully automated

decision-making.

Enabling Contesting of Automated Decisions

Any party significantly impacted by an algorithmic decision-making system should be empowered to contest outcomes they believe are unfair, request explanations, and appeal resolutions through human review.

AI providers should

be transparent about how users can voice objections, which staff oversee cases,

what recourse options exist, and how concerns shape system improvements.

Constructive feedback

loops integrating user reporting into upgrading algorithms promote more ethical

automation aligned with community values.

Appeals processes also guard against incorrect or biased model outputs causing unwarranted harm while the underlying system is being improved. Automated systems demand ongoing accountability.

Facilitating Auditing and Inspections

Independent auditing

of algorithmic systems by internal review boards and external regulators helps

verify processes align with ethical directives and intended purposes rather

than harmful misapplications.

Areas of focus

include scrutinizing training data bias, evaluating how outputs correlate to

protected characteristics, surfacing discriminatory model behaviours, and

assessing motivations driving the adoption of automation versus human services.

Generative AI

development services subject to inspection should facilitate access and

examination by reviewers upon reasonable request under appropriate

confidentiality conditions. Proprietary secrecy must not obstruct

accountability.

Regular constructive

auditing applies vital pressure for improvement.

Subjecting decisions

impacting lives to scrutiny embodies an important check on unrestrained

automation.

Empowering Workers Impacted by Automation

Where workplace

automation through AI becomes necessary due to economic realities, generative

AI development services bear an ethical obligation to support displaced workers

through the transition.

Providing Transition Training

Affected staff should be given ample advance notice of automation initiatives, along with opportunities to move into internal roles less impacted by AI or, if they prefer, reskilling training to support an external job search.

Covering Lost Wages

Transitioning workers

needing retraining to remain employed should receive supplemental wage support

making up for lost income suffered during their reskilling efforts for new

careers.

Financial Aid for Education

For workers seeking to change fields, education stipends that provide funding and leave time for degree or certification programs create paths to new careers, with the company sharing the cost.

This empowers

families otherwise unable to independently afford career shifts.

Job Search Assistance

Personalized guidance

from career counsellors both within human resources departments and third-party

placement firms helps connect displaced staff to new opportunities matching

their strengths and interests.

Generative AI development

services automating jobs away hold a profound duty of care to those affected,

helping them transition with dignity.

Teaching AI right

from wrong remains enormously complex.

However, continuing

progress in developing ethical AI systems promises great benefits if pursued

responsibly.

Generative AI development services must proactively address risks through research and governance while tapping the powerful potential of AI to better lives.

With care and wisdom,

our machine creations can empower moral progress.

But what safeguards

and design principles do you think are most crucial? Should certain

capabilities be approached with restraint? Let us know in the comments.